Choosing the right GCP Load Balancer

A discussion of the points to consider before choosing a GCP load balancer for a particular task.

Google Cloud Platform offers some of the most robust load balancing products in the cloud computing world. At its core, the Cloud Load Balancing product line draws on Google's experience of serving millions of queries per second for products such as Maps, Gmail, Google Ads and YouTube. So, picking the right load balancer and configuring it appropriately for the workload can give you performance comparable to that of a Google product. Of course, the final experience for the user relies heavily on how the product is built, not just on the load balancer or its configuration. Even if everything is set up properly on the load balancer, an application that takes 10 seconds to respond to a request still makes for a terrible user experience.

Assuming the product being exposed to the Internet is well built and serves requests quickly, let's focus on picking the right GCP load balancer to ensure great performance and user experience.

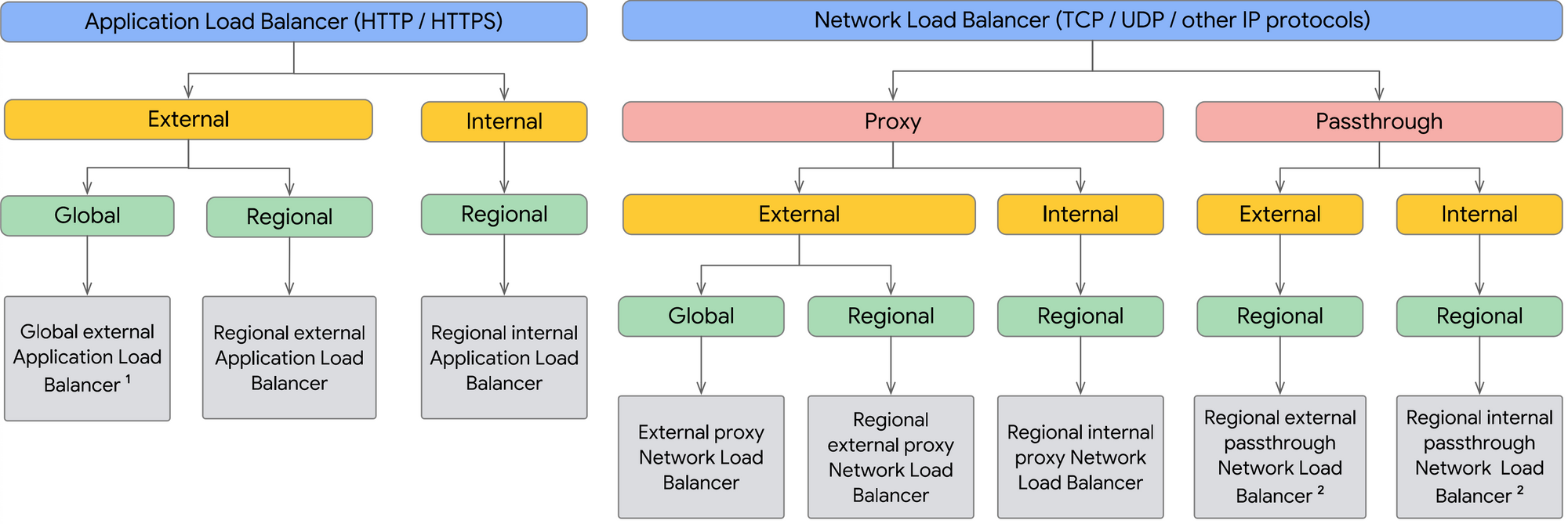

The load balancers on Google Cloud Platform are broadly classified into two types: Application Load Balancers (HTTP/HTTPS) and Network Load Balancers (TCP/UDP/other IP protocols). To understand which load balancing product makes sense for your application, consider the following aspects:

Traffic Type

Certain load balancers are built to handle a specific type of traffic. For example, the application load balancers are built to work with HTTP or HTTPS traffic. So, if you want to expose your application over HTTP or HTTPS, these are the right load balancers. If your application works over TCP, UDP or other IP protocols such as ESP, GRE, ICMP or ICMPv6, passthrough network load balancers are your go-to products. If you need TCP with optional SSL offloading, the proxy network load balancer is the one to pick.
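The mapping above can be written down as a small lookup. This is only a sketch of the rule of thumb in this section, not an official decision procedure: the function name `load_balancer_family` and the `ssl_offload` flag are made up for illustration, and TCP without SSL offloading is assumed to go to the passthrough family.

```python
# Hypothetical helper that mirrors the traffic-type rule of thumb above.
PASSTHROUGH_PROTOCOLS = {"TCP", "UDP", "ESP", "GRE", "ICMP", "ICMPV6"}


def load_balancer_family(protocol: str, ssl_offload: bool = False) -> str:
    """Return the load balancer family suggested for a traffic type."""
    proto = protocol.strip().upper()
    if proto in {"HTTP", "HTTPS"}:
        return "Application Load Balancer"
    if proto == "TCP" and ssl_offload:
        # TCP with SSL offloading is the proxy network load balancer case.
        return "Proxy Network Load Balancer"
    if proto in PASSTHROUGH_PROTOCOLS:
        return "Passthrough Network Load Balancer"
    raise ValueError(f"No suggestion for protocol: {protocol!r}")


if __name__ == "__main__":
    for proto, offload in [("https", False), ("tcp", True), ("udp", False)]:
        print(proto, offload, "->", load_balancer_family(proto, offload))
```

Traffic type is only the first filter. The remaining sections narrow the choice further.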

External vs. Internal

There can be situations when you want to expose your applications only to internal consumers via private IPs rather than to the whole Internet. Google Cloud Platform therefore offers load balancers for both use cases. External load balancers accept traffic from the Internet and distribute it among the resources within your GCP VPC. Internal load balancers accept traffic from resources within your network boundary (GCP VPCs, on-prem networks and so on) and distribute it to applications within the same VPC, or in other VPCs, depending on whether VPC peering, Cloud VPN or Cloud Interconnect is used.

Global vs. Regional Load Balancers

If your backends are distributed across multiple GCP regions and your users require access to these backends, it is recommended to use a Global Load Balancer powered by a single anycast IP.

If your backends are regional in nature (meaning they sit in a single region), or if your applications or organization are constrained by legal data residency requirements, a regional load balancer makes more sense.

Caution: A regional load balancer lives in a single region, so an outage in that region can take the load balancer down along with it.
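As a rough illustration, the global versus regional rule of thumb in this section could be expressed like this. The function name `load_balancer_scope` and its parameters are hypothetical, and the sketch assumes that a data residency requirement always outweighs the multi-region consideration.

```python
from typing import Iterable


def load_balancer_scope(backend_regions: Iterable[str],
                        data_residency_required: bool = False) -> str:
    """Suggest 'global' or 'regional' from where the backends run."""
    regions = {r.strip().lower() for r in backend_regions if r.strip()}
    if not regions:
        raise ValueError("at least one backend region is required")
    # Assumption: legal residency constraints take priority over reach.
    if data_residency_required or len(regions) == 1:
        return "regional"
    return "global"


if __name__ == "__main__":
    print(load_balancer_scope(["us-central1", "europe-west1"]))
    print(load_balancer_scope(["europe-west3"]))
```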

Proxy vs. Pass-through Load Balancers

The key difference between these two types is that proxy-based load balancers terminate the incoming client connection themselves and initiate a new connection to the backends. They essentially "proxy" all requests between clients and backends. Pass-through load balancers, on the other hand, are simply control plane elements programmed into GCP's software-defined networking fabric and therefore don't terminate client connections themselves. They pass the packets directly to the backends.

One key advantage of pass-through load balancers is that the backends see the true client IP addresses on incoming packets and can respond to clients directly, without the load balancer in the return path. With proxy-based load balancers, the backends instead see the IP addresses of the Google Front End (GFE) or Envoy proxies that sit between the client and the backends. The application on the backends can, however, read the X-Forwarded-For HTTP header to obtain the true client IP. This requires some application code rework.
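The code rework mentioned above can be fairly small. Here is a minimal sketch of reading the client IP from X-Forwarded-For. It assumes the header is a comma-separated list to which the load balancer appends the client IP followed by `trusted_hops` proxy addresses. Both the function name and the `trusted_hops` parameter are made up for this example, and the right value depends on the load balancer type and any other proxies in your path, so verify it against the documentation for your setup.

```python
import ipaddress


def client_ip_from_xff(header_value: str, trusted_hops: int = 1) -> str:
    """Extract the client IP from an X-Forwarded-For header value.

    trusted_hops is the number of trailing entries appended by proxies
    you trust after the client IP (an assumption; adjust per setup).
    """
    entries = [e.strip() for e in header_value.split(",") if e.strip()]
    index = len(entries) - 1 - trusted_hops
    if index < 0:
        raise ValueError("header has fewer entries than expected")
    # Count from the right: entries further left can be set by the client
    # itself, so they should not be trusted.
    return str(ipaddress.ip_address(entries[index]))


if __name__ == "__main__":
    # The first entry here is a value supplied (possibly spoofed) by the client.
    print(client_ip_from_xff("10.0.0.1, 203.0.113.7, 198.51.100.1"))
```

Counting from the right rather than taking the first entry matters because a client can send its own X-Forwarded-For value, which ends up at the left of the list.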

Premium vs. Standard Network Tiers

If the IP address of the load balancer is in the premium tier, traffic stays on Google's premium global backbone for as long as possible and only crosses the boundary to the public Internet at the point closest to the client, no matter which region the load balancer itself lives in. This means premium tier traffic isn't subject to the same uncertainties as normal Internet traffic. There are certain optimizations and guarantees in place with regard to latency and packet loss.

In contrast, standard tier is like hot-potato routing. Google will try to hand off and accept traffic for standard tier IPs as close as possible to the region where the load balancer is configured. This means the traffic has to traverse multiple intermediary networks, just like any other Internet traffic, and is subject to the same latency and packet loss factors.

Premium tier is more expensive than standard tier, so this needs to be taken into consideration when budgeting for load balancers. Certain load balancer types are only available in the premium tier, and without proper planning this can throw a wrench into the project financials, especially when large traffic volumes are expected.

Below is a flow chart to help you pick the right load balancer for the task -