Let resources across VPCs talk!

In this article, we explore ways in which communication between multiple GCP VPC networks can be achieved using VPC Peering, Cloud VPN or Multi-NIC VMs.

As your presence in Google Cloud Platform grows, you will often find yourself with more than one VPC. In fact, you will most likely have several: separate VPCs for environments such as Prod, Dev, UAT and Staging. With this kind of sprawl come situations where a resource in, let's say, the Dev VPC needs to talk to a resource in the Staging VPC. This article discusses the options for achieving this communication.

There are three main ways to connect resources across different VPCs:

- VPC Peering

- Cloud VPNs

- Multi-NIC Appliances

VPC Peering

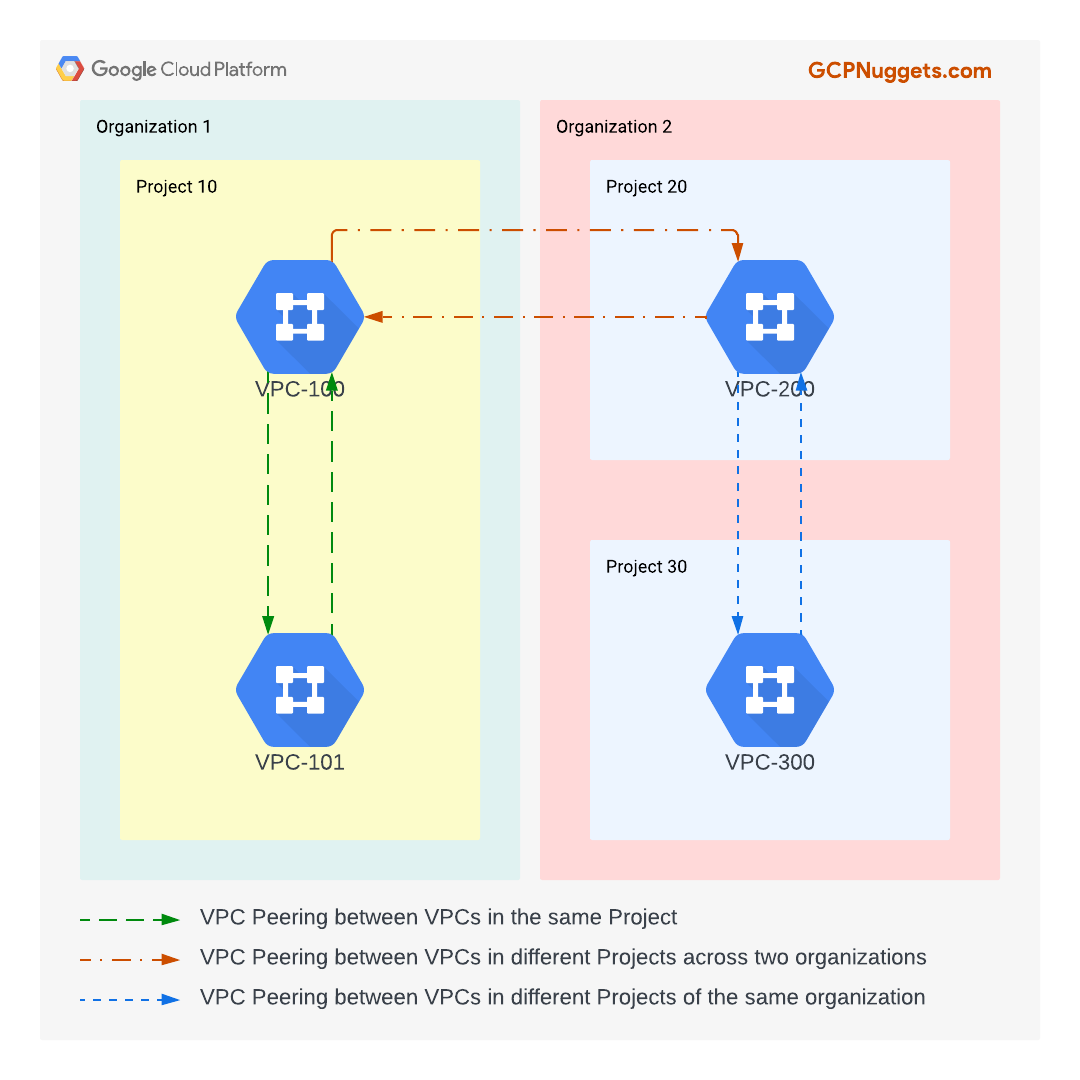

This is the easiest and most performant way to connect two VPCs in GCP. The beauty of this feature is that the two VPCs you are peering need not be in the same project, or even the same organization. Consider a scenario where your organization has recently acquired another organization and you want to connect both GCP environments. VPC Peering is the answer! Note that this solution comes with some caveats, discussed below.

Pros

- Simple cloud native way to establish plumbing between two VPCs.

- No performance or latency penalty: peering is built into Google's software-defined network, so traffic between peered VPCs doesn't pass through any gateway, proxy or physical device.

- Great IaC support. You can get this done easily using Terraform.

- No additional charge for the peering itself (normal network traffic pricing still applies).

Cons

- There cannot be any IP address overlaps between the peered networks.

  - Once VPC Peering is established, the subnet routes of each VPC are exchanged with the other, hence the no-overlap requirement.

- No control over subnet route exchange.

  - All subnet routes are exchanged, no exceptions.

- Once VPCs are peered, certain quotas and limits apply to the peering group as a whole.

  - A peering group is always looked at from the point of view of a single VPC: it consists of that VPC plus every VPC directly peered with it, and its size is the number of VPCs in it.

From the above picture, the peering group size in the context of VPC-200 is three. The peering group size in the context of VPC-101 is two.

- VPC Peering is not transitive.

  - Let's say VPC-A is peered with VPC-B, which is peered with VPC-C. Resources in VPC-A cannot reach resources in VPC-C.

  - The reason is that peering routes (routes a VPC learns through a peering relationship with another VPC) are not passed on to other peers. Therefore, in the peering between VPC-B and VPC-C, VPC-C never learns the VPC-A routes that VPC-B learned.
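The following toy model makes the caveats above easier to reason about. It is a minimal sketch under stated assumptions and not a GCP API. The `Vpc` class, its helper functions and all names and CIDR ranges are hypothetical. It models three behaviours. Peering is refused when subnet ranges overlap. Peering-group size is the VPC plus its direct peers. A VPC learns only its direct peers' subnet routes, which is why VPC-A cannot reach VPC-C.

```python
import ipaddress

# Toy model of VPC Peering rules, not a GCP API.
# VPC names and CIDR ranges are hypothetical.


class Vpc:
    def __init__(self, name, subnets):
        self.name = name
        self.subnets = [ipaddress.ip_network(s) for s in subnets]
        self.peers = set()


def peer(a, b):
    """Peer two VPCs, refusing if any of their subnet ranges overlap."""
    clashes = [(x, y) for x in a.subnets for y in b.subnets if x.overlaps(y)]
    if clashes:
        raise ValueError(f"cannot peer {a.name} with {b.name}: overlapping {clashes}")
    a.peers.add(b)
    b.peers.add(a)


def peering_group_size(vpc):
    """The VPC itself plus every VPC directly peered with it."""
    return 1 + len(vpc.peers)


def learned_routes(vpc):
    """Own subnet routes plus the subnet routes of *direct* peers only.

    Routes a peer learned through its own peerings are not passed on,
    which is what makes peering non-transitive.
    """
    routes = set(vpc.subnets)
    for p in vpc.peers:
        routes.update(p.subnets)
    return routes


def can_reach(src, dst_ip):
    """True if src has a route (own or peered) covering dst_ip."""
    ip = ipaddress.ip_address(dst_ip)
    return any(ip in net for net in learned_routes(src))


# A <-> B <-> C, mirroring the VPC-A / VPC-B / VPC-C example.
vpc_a = Vpc("vpc-a", ["10.1.0.0/16"])
vpc_b = Vpc("vpc-b", ["10.2.0.0/16"])
vpc_c = Vpc("vpc-c", ["10.3.0.0/16"])
peer(vpc_a, vpc_b)
peer(vpc_b, vpc_c)

print(can_reach(vpc_a, "10.2.0.5"))  # True: B is a direct peer
print(can_reach(vpc_a, "10.3.0.5"))  # False: C's routes never reach A
print(peering_group_size(vpc_b))     # 3
```

Trying to peer a VPC that owns, say, `10.1.128.0/20` with `vpc_a` raises `ValueError`, because that range falls inside `10.1.0.0/16`.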

Cloud VPN

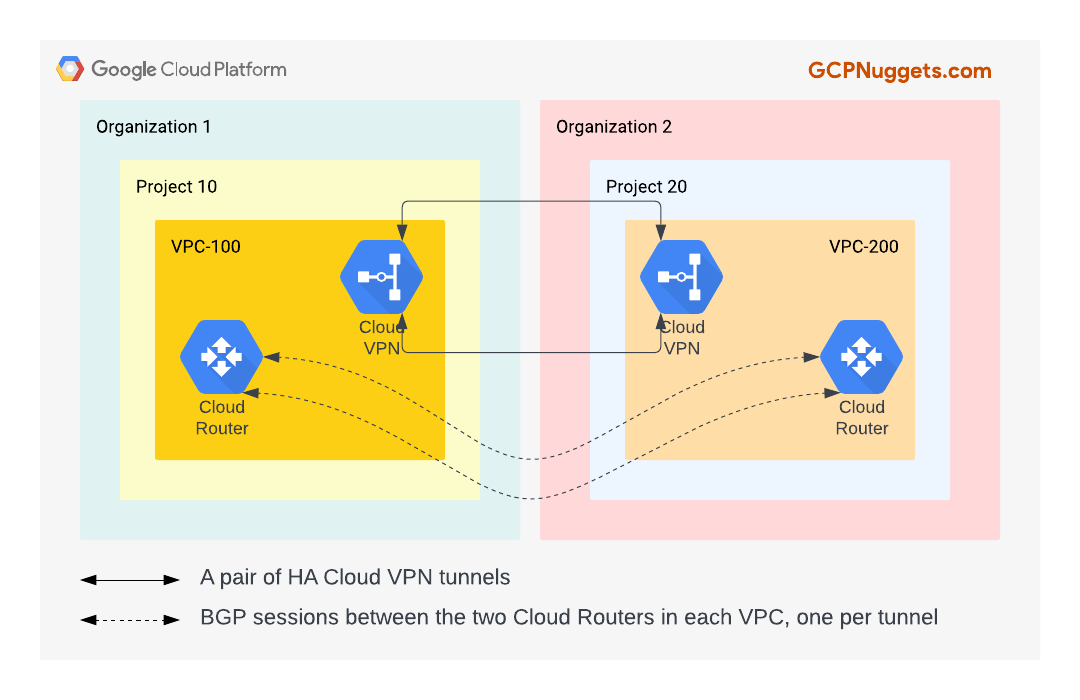

To work around the drawbacks of VPC Peering, particularly the inability to control which routes are exchanged and the lack of transitivity, you can connect VPCs with Cloud VPN tunnels instead.

Pros

- When used with Cloud Router, BGP advertisements let you control precisely which routes are exchanged.

- Since Cloud Router can advertise custom IP ranges in addition to subnet routes (for example, ranges it has learned from another VPC), you can build the transitive connectivity that VPC Peering cannot provide.

- Cloud-native solution, just like VPC Peering.
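The following toy model makes the two routing advantages concrete. It uses a hypothetical hub-and-spoke layout in which each spoke VPC connects to a hub VPC over Cloud VPN and each Cloud Router advertises an explicit list of prefixes. This is a sketch of the idea and not the Cloud Router API. The VPC names, ranges and helper functions are made up. The hub re-advertising one spoke's prefixes to the other stands in for a custom advertisement you would configure by hand.

```python
import ipaddress

# Toy model of route exchange over Cloud VPN + Cloud Router in a hypothetical
# hub-and-spoke layout. Not the Cloud Router API; names and ranges are made up.

# Prefixes each spoke's Cloud Router advertises to the hub. spoke-1 also owns
# 10.11.0.0/16 but chooses not to advertise it (selective advertisement).
SPOKE_ADVERTISES = {
    "spoke-1": ["10.1.0.0/16"],
    "spoke-2": ["10.2.0.0/16"],
}
HUB_SUBNETS = ["10.0.0.0/16"]


def hub_advertises_to(spoke):
    """Hub subnets plus the other spokes' prefixes as custom ranges.

    Re-advertising one spoke's prefixes to another is what turns the hub
    into a transit point, which VPC Peering cannot do.
    """
    others = [
        prefix
        for name, prefixes in SPOKE_ADVERTISES.items()
        if name != spoke
        for prefix in prefixes
    ]
    return HUB_SUBNETS + others


def learned_from_hub(spoke, dst_ip):
    """True if the spoke has a VPN-learned route covering dst_ip."""
    ip = ipaddress.ip_address(dst_ip)
    return any(ip in ipaddress.ip_network(p) for p in hub_advertises_to(spoke))


print(learned_from_hub("spoke-2", "10.1.4.4"))   # True: spoke-1 via the hub
print(learned_from_hub("spoke-2", "10.11.4.4"))  # False: never advertised
```

Spoke-1's unadvertised `10.11.0.0/16` stays invisible to spoke-2. This is the kind of per-prefix control that VPC Peering's "all subnet routes, no exceptions" behaviour does not offer.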

Cons

- Each VPN tunnel supports up to 250,000 packets per second, ingress and egress combined. This works out to roughly 1 Gbps to 3 Gbps of tunnel capacity, depending on the average packet size.

  - If you need more bandwidth than this, you will have to create additional tunnels between the VPCs.

- Costs can rise significantly, since Cloud VPN and Cloud Router are not free.

- It is also relatively complicated to set up and maintain compared to VPC Peering, because of all the knobs and switches you can play around with.
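The 1 to 3 Gbps range follows from simple arithmetic on the 250,000 packets-per-second limit. The sketch below reproduces it and estimates how many tunnels a given bandwidth needs. The 500-byte and 1,500-byte average packet sizes are illustrative assumptions that reproduce the two bounds. The helper names are hypothetical. The model also ignores the fact that the limit covers both directions combined.

```python
import math

# Back-of-the-envelope Cloud VPN sizing. The 250,000 packets/s per-tunnel
# limit (ingress + egress combined) is the figure quoted above; the packet
# sizes and bandwidth targets below are illustrative assumptions.
MAX_PPS_PER_TUNNEL = 250_000


def tunnel_gbps(avg_packet_bytes):
    """Approximate capacity of one tunnel in Gbps for an average packet size."""
    return MAX_PPS_PER_TUNNEL * avg_packet_bytes * 8 / 1e9


def tunnels_needed(required_gbps, avg_packet_bytes):
    """Minimum number of tunnels to carry the required bandwidth."""
    return math.ceil(required_gbps / tunnel_gbps(avg_packet_bytes))


print(tunnel_gbps(500))          # 1.0
print(tunnel_gbps(1500))         # 3.0
print(tunnels_needed(10, 1500))  # 4
```

Smaller average packets drive the count up quickly. At 500 bytes, the same 10 Gbps would need ten tunnels.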

Note: with both VPC Peering and Cloud VPN, you still need security controls in place at the GCP firewall rules and/or firewall policies layer.

Multi-NIC Appliances

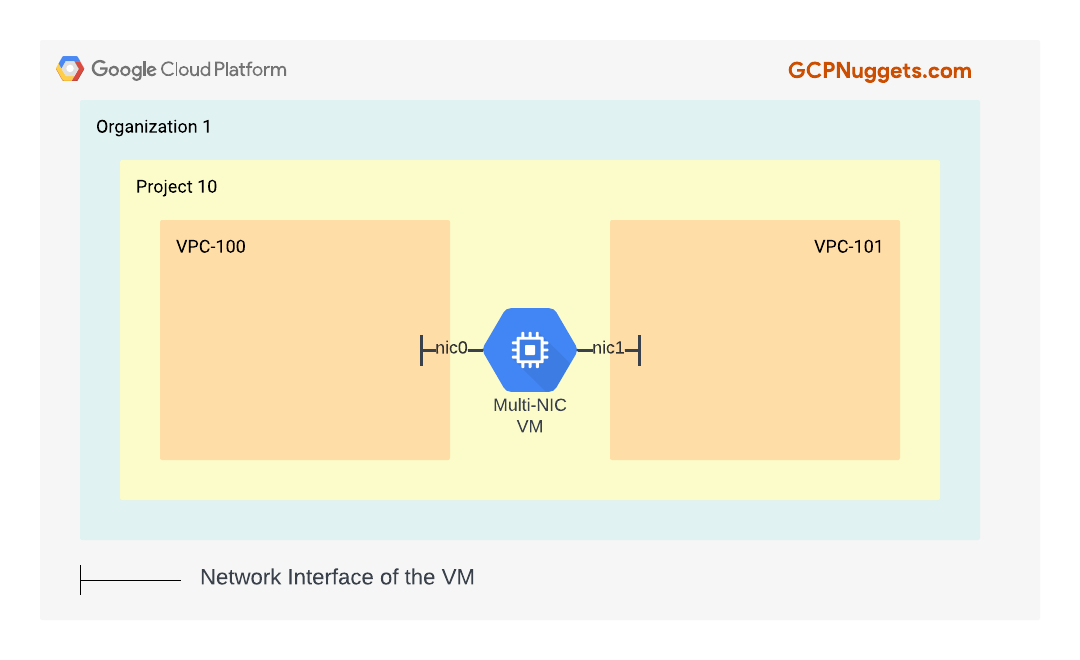

This is the most complicated setup of the three options. It involves a non-cloud-native VM or appliance acting as a bridge between the VPCs. Think of it as a router with one leg in each VPC: the VM has two or more network interfaces, one in each VPC you want to connect. Certain third-party appliances from the GCP Marketplace, such as Palo Alto NGFW and Fortinet firewalls, also perform this function very well, in addition to all of their other security capabilities.
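The bridge VM's core job is to decide which interface a packet leaves through. The minimal sketch below shows that decision as a longest-prefix match over a hypothetical routing table for a two-NIC VM. The interface-to-VPC mapping and the ranges are assumptions. On a real VM these routes live in the guest OS. The VM also needs IP forwarding enabled, and each VPC needs custom routes that send the other VPC's ranges to it. Neither of those is shown here.

```python
import ipaddress

# Hypothetical routing table inside a two-NIC bridge VM: nic0 is in the Dev
# VPC, nic1 in the Staging VPC. Ranges are made up; a real VM holds these
# routes in its guest OS, not in Python.
ROUTES = [
    ("0.0.0.0/0", "nic0"),     # default route out of the first interface
    ("10.10.0.0/16", "nic0"),  # Dev VPC range (assumed)
    ("10.20.0.0/16", "nic1"),  # Staging VPC range (assumed)
]


def egress_nic(dst_ip):
    """Choose the outgoing interface by longest-prefix match."""
    ip = ipaddress.ip_address(dst_ip)
    candidates = [
        (ipaddress.ip_network(prefix), nic)
        for prefix, nic in ROUTES
        if ip in ipaddress.ip_network(prefix)
    ]
    # For IPv4 destinations the default route always matches,
    # so candidates is never empty.
    _, nic = max(candidates, key=lambda c: c[0].prefixlen)
    return nic


print(egress_nic("10.20.7.9"))  # nic1: the /16 beats the /0 default
print(egress_nic("10.10.7.9"))  # nic0
```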

Pros

- This is the preferred approach for many medium-to-large enterprises because of security requirements.

  - For example, sensitive workloads may require deep packet inspection.

- Very fine-grained control over which resources can talk to which across VPCs.
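Fine-grained control on a bridge VM usually takes the form of an ordered rule list. The sketch below uses a common pattern: the first matching rule wins, and anything unmatched is dropped. The rules, ranges, ports and the `decide` helper are all hypothetical. iptables and vendor appliances each have their own rule syntax and semantics.

```python
import ipaddress

# Hypothetical first-match allow-list on the bridge VM, with default deny.
# Rules, ranges and ports are illustrative only.
RULES = [
    # (source CIDR, destination CIDR, destination port or None, action)
    ("10.10.1.0/24", "10.20.2.15/32", 5432, "allow"),  # Dev app -> Staging DB
    ("10.10.0.0/16", "10.20.0.0/16", None, "deny"),    # rest of Dev -> Staging
]


def decide(src, dst, port):
    """Return the action of the first rule matching (src, dst, port)."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for src_net, dst_net, rule_port, action in RULES:
        if (
            s in ipaddress.ip_network(src_net)
            and d in ipaddress.ip_network(dst_net)
            and rule_port in (None, port)
        ):
            return action
    return "deny"  # default deny when nothing matches


print(decide("10.10.1.7", "10.20.2.15", 5432))  # allow
print(decide("10.10.1.7", "10.20.2.15", 22))    # deny
```

Rule order matters here. If the broad `deny` rule came first, the database rule would never be reached.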

Cons

- Not cloud-native.

- Added cost of the VM instances running the bridging software (be it iptables or appliance software), plus license costs if you use proprietary software.

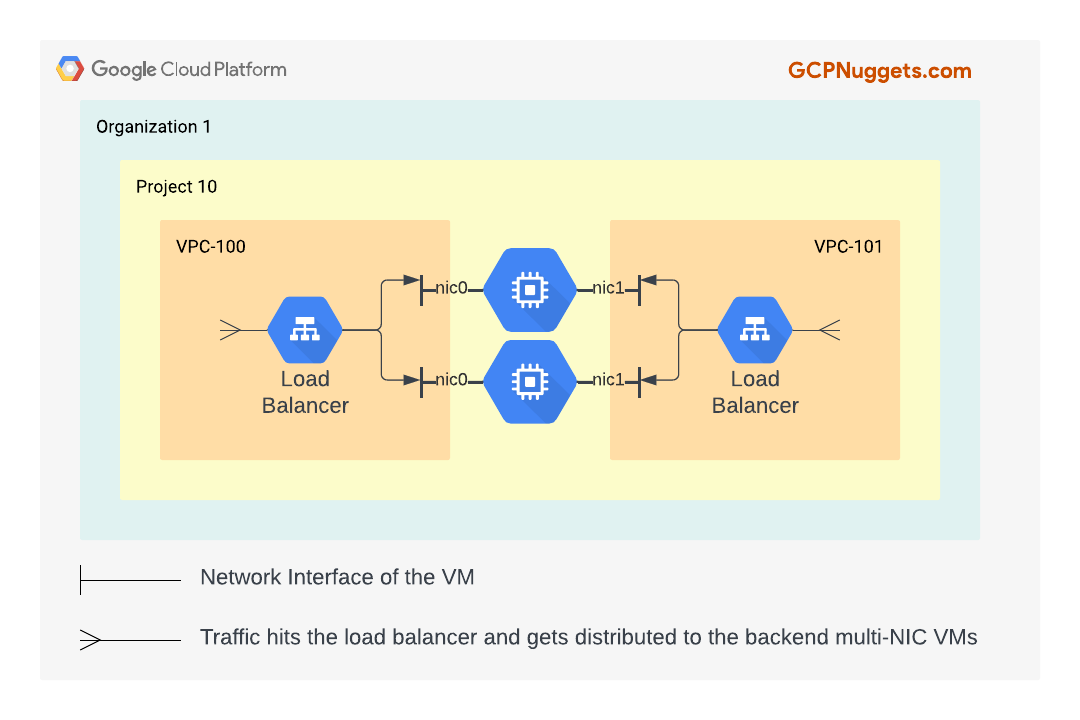

- Scaling constraints: not all software-based solutions can be deployed in a managed instance group to autoscale with bandwidth demand.

  - Besides, more scale means more cost for the resources running the software.

  - If you have a group of multi-NIC VMs acting as a bridge between VPCs, you will need to sandwich them between load balancers, which adds further cost.

- All network interfaces of a multi-NIC VM must be in subnets of different VPCs, and those VPCs must belong to the VM's own project (or be Shared VPC networks shared with that project).

  - Outside of Shared VPC, you cannot use multi-NIC VMs to connect VPCs from two different projects.

- In GCP, a VM can have at most eight network interfaces, and the exact limit depends on its vCPU count.

  - This means a single multi-NIC VM can connect at most eight VPCs.

- You cannot add network interfaces to, or remove them from, an existing VM.

  - Therefore, plan the number of network interfaces on your multi-NIC VM up front.
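Because interfaces cannot be added later, it helps to size the VM before creating it. The helper below is a rough sketch built on an assumption. It uses the commonly documented rule for most machine types: up to two vNICs for VMs with two or fewer vCPUs, otherwise one vNIC per vCPU, capped at eight. Newer machine series may differ, so check the current documentation. The function names are hypothetical.

```python
# Rough sizing helper for a multi-NIC bridge VM. ASSUMPTION: the common rule
# for most machine types, i.e. up to 2 vNICs at 2 vCPUs or fewer, otherwise
# one vNIC per vCPU capped at 8. Verify against your machine series.
MAX_VNICS = 8


def max_vnics(vcpus):
    """Assumed vNIC limit for a VM with the given vCPU count."""
    return min(max(2, vcpus), MAX_VNICS)


def min_vcpus_for(vpc_count):
    """Smallest vCPU count whose vNIC limit allows one NIC per VPC.

    Round the result up to a machine shape that actually exists.
    """
    if vpc_count > MAX_VNICS:
        raise ValueError(
            f"{vpc_count} VPCs exceed the {MAX_VNICS}-interface limit of one VM"
        )
    return 1 if vpc_count <= 2 else vpc_count


print(max_vnics(4))      # 4
print(min_vcpus_for(3))  # 3
```

For example, bridging three VPCs under this assumption needs at least three vCPUs. In practice that means a four-vCPU machine shape.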

Ultimately, the choice between these options depends heavily on the workloads being deployed, your security requirements and other network dependencies. The answer may also be a combination of two or more of them.